Important Dates

- Submission Deadlines: 9th of July 2020 (intention to submit), 15th of July 2020 (full paper)

- Notification of Acceptance: 6th of August 2020

- Camera Ready: 15th of August 2020

- Workshop Date: 4th of October 2020, 14:00-18:00 UTC

Best Paper Award

- 1st place: Siloed Federated Learning for Multi-Centric Histopathology Datasets by Jean Ogier du Terrail (Owkin, Inc.), Mathieu Andreux (Owkin, Inc.), Constance Beguier (Owkin, Inc.), Eric W Tramel (Owkin, Inc.)

- 2nd place (shared): Inverse Distance Aggregation for Federated Learning with Non-IID Data by Yousef Yeganeh (Technical University of Munich) et al., and Fed-BioMed: A general open-source frontend framework for federated learning in healthcare by Santiago Silva (INRIA) et al.

- 3rd place: On the Fairness of Privacy-Preserving Representations in Medical Applications by Mhd Hasan Sarhan (Technical University of Munich) et al.

News

[October] DCL is now live on the MICCAI platform. Looking forward to a great DCL workshop with you!

[August] The notifications of accepted papers are out! Please note that we need your posters and recordings by September 13th.

[July] Following several requests, we have extended the submission deadline. Please note that the intention to submit must still be entered into the CMT system by 9th of July 2020, and the full paper by 15th of July 2020. NVIDIA sponsors a Titan RTX for the DCL best paper award!

[June] The submission deadline has been updated. Please submit your paper via the CMT system. All MICCAI workshops, including DCL, will take place virtually this year.

[May] MICCAI's workshop schedule has been announced. The DCL workshop will take place on October 4 in the afternoon.

[April] Our first keynote speaker is confirmed! Dzung Pham will talk about governance and ethics in the era of distributed learning.

[March] The DCL Workshop has been approved for MICCAI 2020.

Distributed and Collaborative Learning

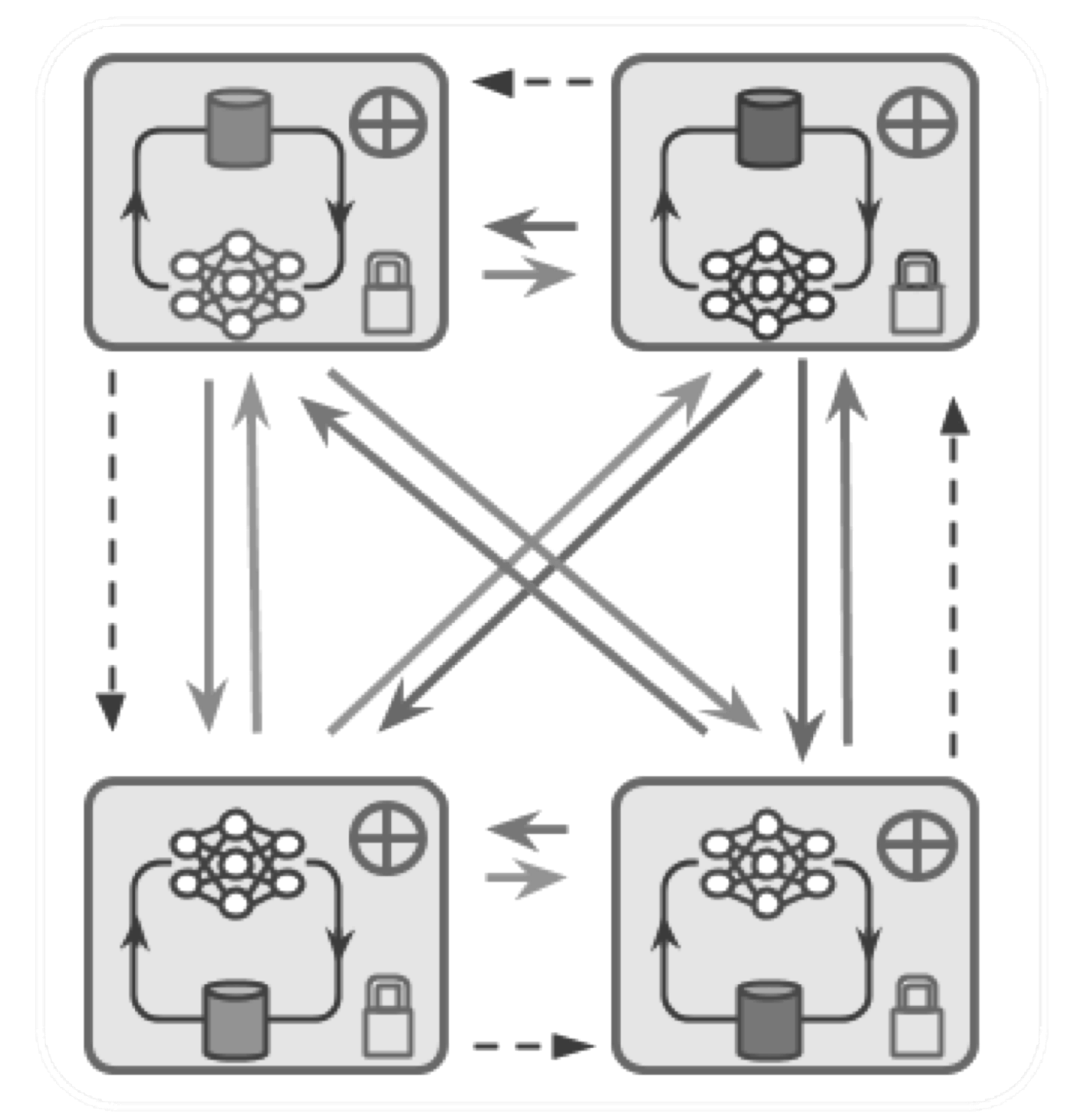

Deep learning has enabled enormous scientific advances, with key applications in healthcare. It is widely accepted that models improve with growing amounts of data. However, learning on such huge datasets, or training huge models in a timely manner, requires distributing the learning across several devices. A particularity of the medical domain, and of medical imaging in particular, is that data sharing across institutions is often impractical due to strict privacy regulations, which makes the collection of large-scale centralized datasets practically impossible.

The key questions therefore become: how can we train models in a distributed way across several devices? And can we achieve models as strong as those trained on large centralized datasets without sharing data and breaching privacy and property restrictions? Distributed machine learning, including Federated Learning (FL), could help solve the latter problem: different institutions can contribute to building more powerful models through collaborative training without sharing any training data. The trained model can be shared across institutions, while the actual data stays where it is. We hope that FL and other forms of distributed and collaborative learning will make it possible to train better, more robust models of higher clinical utility while still protecting the privacy of the data.
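To illustrate how institutions can collaborate without sharing data, here is a minimal sketch of federated averaging (FedAvg), one common FL aggregation scheme; it is not the method of any paper listed on this page. Each hypothetical site fits a toy 1-D linear model on its private data and returns only its updated weights and sample count, and the server averages those weights in proportion to sample count. The function names `local_update`, `fed_avg` and `train_federated`, the toy model, the learning rate and the number of rounds are all illustrative assumptions.

```python
from typing import List, Tuple

Weights = Tuple[float, float]        # (slope w, intercept b) of a toy 1-D linear model
Dataset = List[Tuple[float, float]]  # (x, y) pairs; these never leave a site


def local_update(weights: Weights, data: Dataset, lr: float = 0.05, epochs: int = 20) -> Weights:
    """Hypothetical site-side step: full-batch gradient descent on mean squared error."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Both gradients are computed from the same (w, b) before either is updated.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fed_avg(updates: List[Tuple[Weights, int]]) -> Weights:
    """Hypothetical server-side step: average site weights, weighted by sample count."""
    total = sum(n for _, n in updates)
    w = sum(wt[0] * n for wt, n in updates) / total
    b = sum(wt[1] * n for wt, n in updates) / total
    return w, b


def train_federated(sites: List[Dataset], rounds: int = 100) -> Weights:
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each site trains locally; only (weights, sample count) is sent to the server.
        updates = [(local_update(global_weights, data), len(data)) for data in sites]
        global_weights = fed_avg(updates)
    return global_weights
```

For example, three sites holding noiseless samples of y = 2x + 1 over different x ranges should converge close to w = 2, b = 1 without ever pooling their data. Practical deployments add components this sketch omits, such as secure aggregation, differential privacy and strategies for non-IID data.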

Call for Papers

With the first MICCAI Workshop on "Distributed and Collaborative Learning" (DCL), we aim to provide a forum to compare, evaluate and discuss methodological advances and ideas around federated, distributed, and collaborative learning schemes that are applicable in the medical domain. Topics of interest include:

- Federated learning, distributed learning, and other forms of collaborative learning

- Server-client and peer-to-peer learning

- Advanced data-parallel and model-parallel learning techniques

- Optimization methods for distributed or collaborative learning

- Privacy-preserving techniques and security for distributed or collaborative learning

- Efficient communication and learning (multi-device, multi-node)

- Adversarial attacks on distributed or collaborative learning

- Dealing with unbalanced (non-IID) data in collaborative learning

- Asynchronous learning

- Software tools and implementations of distributed or collaborative learning

- Model sharing techniques, sparse/partial learning of models

- Applications of distributed/collaborative learning techniques: multi-task learning, model-agnostic learning, meta-learning, etc.

Program

| Start (UTC) | End (UTC) | Session |
|---|---|---|
| 14:00 | 14:05 | Welcome and opening - Nicola Rieke |
| 14:05 | 14:45 | Keynote 1 - Dzung Pham |
| 14:45 | 15:45 | Oral Session 1: Weight Erosion: an Update Aggregation Scheme for Personalized Collaborative Machine Learning |
| 15:45 | 16:00 | Break with poster session (Oral 1 papers) |
| 16:00 | 16:40 | Keynote 2 - Jayashree Kalpathy-Cramer |
| 16:40 | 17:40 | Oral Session 2: Inverse Distance Aggregation for Federated Learning with Non-IID Data |
| 17:40 | 17:55 | Break with poster session (Oral 2 papers) |
| 17:55 | 18:15 | Best paper award & Concluding remarks |

Keynote Session

Dzung Pham

National Institutes of Health

Topic: Governance & Ethics in the Rise of Distributed Learning

Abstract: Distributed learning techniques have been proposed to satisfy the increasing need for larger and more diverse training data sets while circumventing privacy concerns involved with traditional data sharing. However, such techniques have their own set of potential obstacles that must be surmounted to ensure an ethical and secure treatment of health information. In this talk, I will provide an overview of the current challenges and opportunities in adopting distributed learning in the medical imaging community. Approaches to adversarial attacks, differential privacy, and data encryption will be reviewed. Regulatory issues, standardization, and quality assurance will also be discussed with a focus on describing the major practical considerations in implementing distributed learning collaborations. The early steps of this growing area of research will likely play a significant role in shaping the future of artificial intelligence in medicine.

Jayashree Kalpathy-Cramer

Harvard Medical School and Massachusetts General Hospital

Topic: Collaborative Deep Learning: Opportunities and Challenges

Abstract: Deep learning has great potential in medical imaging, but concerns about bias, model brittleness and generalizability remain. Training robust deep learning models requires large amounts of diverse, well-annotated patient data. However, imaging data cannot always be shared due to patient privacy concerns and a lack of data-sharing infrastructure. Collaboratively training deep neural networks on multi-institutional data without sharing patient data is one approach to address this. Technical approaches to collaborative learning include federated learning, cyclical weight transfer and split learning. There are a number of technical considerations in developing optimal methods for collaborative learning. We will discuss results from multi-institutional learning for breast density estimation in mammography using open-source frameworks.

Accepted Papers

Weight Erosion: an Update Aggregation Scheme for Personalized Collaborative Machine Learning, Felix H M Grimberg (EPFL); Mary-Anne Hartley (EPFL); Martin Jaggi (EPFL); Sai Praneeth Karimireddy (EPFL)

Fed-BioMed: A general open-source frontend framework for federated learning in healthcare, Santiago Silva (INRIA); Andre Altmann (Stanford University); Boris Gutman (Illinois Institute of Technology); Marco Lorenzi (INRIA)

On the Fairness of Privacy-Preserving Representations in Medical Applications, Mhd Hasan Sarhan (Technical University of Munich); Nassir Navab (Technical University of Munich); Abouzar Eslami (Carl Zeiss Meditec AG.); Shadi Albarqouni (ETH Zurich)

Siloed Federated Learning for Multi-Centric Histopathology Datasets, Jean Ogier du Terrail (Owkin, Inc.); Mathieu Andreux (Owkin, Inc.); Constance Beguier (Owkin, Inc.); Eric W Tramel (Owkin, Inc.)

Inverse Distance Aggregation for Federated Learning with Non-IID Data, Yousef Yeganeh (Technical University of Munich); Azade Farshad (Technical University of Munich); Nassir Navab (Technical University of Munich); Shadi Albarqouni (ETH Zurich)

Federated Learning for Breast Density Classification: A Real-World Implementation, Holger R Roth (NVIDIA); Ken Chang (Harvard); Praveer Singh (Harvard); Wenqi Li (NVIDIA); Nir Neumark; Vikash Gupta (OSU); Min Yun (Harvard); Evan Leibovitz (Partners); Bernardo Bizzo (MGH-BWH CCDS); Daguang Xu (NVIDIA Corporation); Jayashree Kalpathy-Cramer (MGH/Harvard Medical School); Alvin Ihsani (NVIDIA); Yuhong Wen (NVIDIA); Varun Buch (MGH-BWH CCDS); Adam McCarthy (MGH-BWH CCDS); Katharina Hoebel (MIT); Jay Patel (MIT); Ittai Dayan (Partners); Ram Naidu (MGH & BWH Center for Clinical Data Science); Thomas Schultz (Partners); Prerna Dogra (NVIDIA); Andy Feng (NVIDIA); Yan Cheng (NVIDIA); Selnur Erdal (OSU); Richard White (OSU); Keith Dreyer (Partners); Felipe C Kitamura (Diagnósticos da América (DASA)); Meesam Shah (ACR); Laura Coombs (ACR); Ahmed Harouni (NVIDIA); Jeffrey Hawley (OSU); Miao Zhang (Stanford); Liangqiong Qu (Stanford University); Jesse Tetreault (NVIDIA); Sharut Gupta (QTIM); Mona Flores (NVIDIA); Daniel Rubin (Stanford University); Jayashree Kalpathy-Cramer (Massachusetts General Hospital)

Federated Gradient Averaging for Multi-Site Training with Momentum-Based Optimizers, Samuel W Remedios (Johns Hopkins University); John Butman (National Institutes of Health); Bennett A Landman (Vanderbilt University); Dzung Pham (National Institutes of Health)

Automated Pancreas Segmentation Using Multi-institutional Collaborative Deep Learning, Chen Shen (Nagoya University); Pochuan Wang (National Taiwan University); Holger R Roth (NVIDIA); Dong Yang (NVIDIA Corporation); Daguang Xu (NVIDIA Corporation); Masahiro Oda (Nagoya University); Kazunari Misawa (Aichi Cancer Center); Po-Ting Chen (National Taiwan University Hospital); Wei-Chih Liao (National Taiwan University Hospital); Kao-Lang Liu (National Taiwan University Hospital); Weichung Wang (National Taiwan University); Kensaku Mori (Nagoya University)

Meet the Organising Team

Contact: nrieke(at)nvidia.com

Shadi Albarqouni

TUM/ETH Zürich

M. Jorge Cardoso

King’s College London

Wenqi Li

NVIDIA

Nicola Rieke

NVIDIA

Daguang Xu

NVIDIA

Spyridon Bakas

University of Pennsylvania

Bennett Landman

Vanderbilt University

Fausto Milletari

Verb Surgical

Holger Roth

NVIDIA

Program Committee

Amir Alansary, Imperial College London

Reuben Dorent, KCL

Ralf Floca, DKFZ

Mark Graham, KCL

Jonny Hancox, NVIDIA Corporation

Yuankai Huo, Vanderbilt University

Klaus Kades, DKFZ

Jayashree Kalpathy-Cramer, MGH/Harvard Medical School

Sarthak Pati, University of Pennsylvania

G Anthony Reina, Intel Corporation

Daniel Rubin, Stanford University

Wojciech Samek, Fraunhofer HHI

Jonas Scherer, DKFZ

Micah J Sheller, Intel Corporation

Ziyue Xu, NVIDIA Corporation

Dong Yang, NVIDIA Corporation

Maximilian Zenk, DKFZ


Sponsor

NVIDIA sponsors a Titan RTX for the DCL best paper award.

Submission Guidelines

Format: Papers must be submitted electronically in the Lecture Notes in Computer Science (LNCS) style, with up to 8 + 2 pages (the same format as MICCAI 2020). Submissions exceeding the page limit will be rejected without review. LaTeX style files are available from Springer, together with instructions for Word. Submissions must be in Adobe Portable Document Format (PDF); other formats will not be accepted.

Double Blind Review: DCL reviewing is double blind. Please review the anonymity guidelines of the MICCAI main conference, and make sure that the author field does not break anonymity.

Paper Submission: DCL uses the CMT system for online submission.

Supplemental Material: Submitting supplemental material is optional and follows the same deadline as the main paper. Supplemental material should be referred to appropriately in the paper; however, reviewers are not obliged to read it.

Submission Originality: Submissions must be original: no paper with substantially similar content may be under peer review or have been accepted for publication elsewhere (conference or journal, not including archived work).

Proceedings: The proceedings of DCL 2020 will be published as part of the joint MICCAI Workshops proceedings with Springer (LNCS).